JPG vs. GIF vs. PNG

JPEG/JPG: Short for Joint Photographic Experts Group, the original name of the committee that wrote the standard. JPG is one of the image file formats supported on the Web. It uses a lossy compression technique designed to compress color and grayscale continuous-tone images. The information discarded during compression is chosen to be information the human eye is least likely to notice. JPG images support about 16 million colors (24-bit color) and are best suited for photographs and complex graphics. The user typically has to trade image quality against file size. JPG does not work well on line drawings, lettering or simple graphics: their sharp edges and flat areas contain little detail that can be thrown out unnoticed, so the lossy process visibly degrades them and the image loses clarity and sharpness.
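To make "lossy" concrete, here is a toy Python sketch of the step where JPEG throws information away: a row of pixel values is transformed with a discrete cosine transform (DCT), the coefficients are divided by a step size and rounded, and the row is rebuilt from the rounded values. This is a simplification under stated assumptions, not a JPEG encoder: real JPEG works on 2-D 8x8 blocks, converts color to luminance/chrominance, uses per-coefficient quantization tables and adds entropy coding. The function names and the sample row are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def _scale(k: int, n: int) -> float:
    # Normalization that makes the transform orthonormal, so idct exactly inverts dct.
    return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)


def dct(samples: Sequence[float]) -> List[float]:
    """1-D DCT-II: express the samples as a sum of cosine 'frequency' components."""
    n = len(samples)
    return [_scale(k, n) * sum(x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i, x in enumerate(samples))
            for k in range(n)]


def idct(coeffs: Sequence[float]) -> List[float]:
    """Inverse transform (DCT-III): rebuild the samples from the cosine components."""
    n = len(coeffs)
    return [sum(_scale(k, n) * c * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                for k, c in enumerate(coeffs))
            for i in range(n)]


def lossy_roundtrip(samples: Sequence[int], step: float) -> Tuple[List[int], List[int]]:
    """Quantize the DCT coefficients with a uniform step, then reconstruct the pixels."""
    quantized = [round(c / step) for c in dct(samples)]  # this rounding discards information
    restored = idct([q * step for q in quantized])
    return quantized, [round(v) for v in restored]


row = [52, 55, 61, 66, 70, 61, 64, 73]  # one row of 8-bit pixel values (example data)
for step in (1, 10, 40):
    print(step, lossy_roundtrip(row, step))
```

A larger step rounds more coefficients to zero (which entropy coding then stores cheaply) and gives a coarser reconstruction: the quality-versus-size compromise described above.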

GIF: Short for Graphics Interchange Format, another of the graphics formats supported by the Web. Unlike JPG, GIF uses lossless compression, and it supports only 256 colors. GIF is better than JPG for images with only a few distinct colors, such as line drawings, black and white images and small text that is only a few pixels high. With an animation editor, GIF images can be combined into animations. GIF also supports transparency: one color can be designated as transparent so that the underlying Web page shows through. The compression algorithm used in GIF (LZW) was patented by Unisys, and companies that used the algorithm were expected to license it from Unisys. Unisys announced in 1995 that it would require licensing fees for the use of GIF. This did not mean that anyone who created or used a GIF image had to pay; the fees applied to authors of programs that output GIF images. The LZW patents have since expired (in 2003 in the United States and in 2004 elsewhere).
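GIF's lossless compression is based on LZW (Lempel-Ziv-Welch), the algorithm covered by the Unisys patent. The Python sketch below shows the core idea: repeated byte sequences are added to a dictionary and replaced by a single code, and the decoder rebuilds the same dictionary so the original data comes back exactly. It is a simplified illustration; a real GIF encoder also uses variable-width codes, clear and end-of-information codes, a 4096-entry dictionary limit and bit packing, none of which are shown.

```python
from typing import Dict, List


def lzw_compress(data: bytes) -> List[int]:
    """Replace repeated byte sequences with dictionary codes."""
    dictionary: Dict[bytes, int] = {bytes([i]): i for i in range(256)}  # all single bytes
    next_code = 256
    current = b""
    codes: List[int] = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in dictionary:
            current = candidate                # keep extending a known sequence
        else:
            codes.append(dictionary[current])  # emit the longest known sequence
            dictionary[candidate] = next_code  # and learn the new, longer one
            next_code += 1
            current = bytes([byte])
    if current:
        codes.append(dictionary[current])
    return codes


def lzw_decompress(codes: List[int]) -> bytes:
    """Rebuild the same dictionary on the fly and recover the exact input."""
    if not codes:
        return b""
    dictionary: Dict[int, bytes] = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    output = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:
            entry = previous + previous[:1]    # code the encoder defined one step ago
        else:
            raise ValueError("invalid LZW code")
        output.append(entry)
        dictionary[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(output)


data = b"ABABABABABAB"
codes = lzw_compress(data)
print(len(data), "bytes ->", len(codes), "codes")  # 12 bytes -> 6 codes
print(lzw_decompress(codes) == data)               # True: nothing was lost
```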

PNG: Short for Portable Network Graphics, the third graphics standard supported by the Web (though older browsers did not all support it fully). PNG was developed as a patent-free alternative to GIF and also improves on the GIF technique. A lossless PNG file of an image can be compressed 5%-25% more than a GIF file of the same image. PNG builds on GIF's idea of transparency and allows control of the degree of transparency, known as opacity, stored per pixel in an alpha channel. Because its compression is lossless, saving, reopening and re-saving a PNG image will not degrade its quality. The original PNG specification does not support animation the way GIF does.
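The difference between GIF's on/off transparency and PNG's graded opacity shows up when a pixel is drawn over the page background. The sketch below assumes an 8-bit alpha value (0 = fully transparent, 255 = fully opaque) and applies the standard "over" blend to a single RGB pixel; the function name is hypothetical, and real browsers also deal with gamma and color management, which are ignored here.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def composite_over(foreground: RGB, alpha: int, background: RGB) -> RGB:
    """Blend a foreground pixel with 8-bit opacity `alpha` over a background pixel."""
    if not 0 <= alpha <= 255:
        raise ValueError("alpha must be between 0 and 255")
    a = alpha / 255  # opacity as a fraction
    # Each channel is a weighted average: opaque parts show the foreground,
    # transparent parts let the background show through.
    return tuple(round(f * a + b * (1 - a)) for f, b in zip(foreground, background))


# A GIF pixel is either fully transparent or fully opaque (alpha 0 or 255);
# a PNG alpha channel can also store in-between values such as 128.
red, blue = (255, 0, 0), (0, 0, 255)
print(composite_over(red, 255, blue))  # opaque: background hidden
print(composite_over(red, 0, blue))    # transparent: background shows through
print(composite_over(red, 128, blue))  # roughly half-transparent
```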

Server Types

MTDL: Mean Time until Data Loss. In data storage, MTDL is the average time until a component failure can be expected to cause data loss.

Server Platforms: A term often used synonymously with operating system, a platform is the underlying hardware or software for a system and is thus the engine that drives the server.

Application Servers: Sometimes referred to as a type of middleware, application servers occupy a large chunk of computing territory between database servers and the end user, and they often connect the two.

Audio/Video Servers: Audio/Video servers bring multimedia capabilities to Web sites by enabling them to broadcast streaming multimedia content.

Chat Servers: Chat servers enable a large number of users to exchange information in an environment similar to Internet newsgroups, but with real-time discussion capabilities.

Fax Servers: A fax server is an ideal solution for organizations that want to reduce the telephone resources they devote to incoming and outgoing faxes but still need to fax actual documents.

FTP Servers: One of the oldest of the Internet services, File Transfer Protocol makes it possible to move one or more files between computers while providing file organization and transfer control. Plain FTP does not encrypt passwords or data; secure alternatives such as FTPS (FTP over TLS) and SFTP are used when file security matters.

Groupware Servers: A groupware server is software designed to enable users to collaborate, regardless of location, via the Internet or a corporate intranet and to work together in a virtual atmosphere.

IRC Servers: An option for those seeking real-time discussion capabilities, Internet Relay Chat consists of various separate networks (or "nets") of servers that allow users to connect to each other via an IRC network.

List Servers: List servers offer a way to better manage mailing lists, whether they be interactive discussions open to the public or one-way lists that deliver announcements, newsletters, or advertising.

Mail Servers: Almost as ubiquitous and crucial as Web servers, mail servers move and store mail over corporate networks (via LANs and WANs) and across the Internet.

News Servers: News servers act as a distribution and delivery source for the thousands of public news groups currently accessible over the USENET news network.

Proxy Servers: Proxy servers sit between a client program (typically a Web browser) and an external server (typically another server on the Web) to filter requests, improve performance, and share connections.

Telnet Servers: A Telnet server enables users to log on to a host computer and perform tasks as if they're working on the remote computer itself.

Web Servers: At its core, a Web server serves static content by loading a file from disk and sending it across the network to a user's Web browser. The browser and server conduct this entire exchange using HTTP.
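The core exchange described above (read a file from disk, send it back over HTTP) can be sketched in Python without any networking code: the hypothetical `handle_request` function below turns one HTTP request line into a complete HTTP/1.0 response. It assumes a `doc_root` directory of static files, handles only GET, ignores query strings and request headers, and refuses paths that would escape the document root; a real Web server adds sockets, concurrency, caching headers and much more.

```python
import mimetypes
import os
import tempfile


def handle_request(request_line: str, doc_root: str) -> bytes:
    """Turn one request line such as 'GET /index.html HTTP/1.0' into an HTTP/1.0 response."""
    parts = request_line.split()
    if len(parts) != 3 or parts[0] != "GET":
        return b"HTTP/1.0 400 Bad Request\r\nContent-Length: 0\r\n\r\n"
    # Map the URL path onto the document root ("/" means index.html).
    rel_path = parts[1].lstrip("/") or "index.html"
    root = os.path.realpath(doc_root)
    full_path = os.path.realpath(os.path.join(root, rel_path))
    # Refuse anything outside the document root (e.g. "/../secret.txt") or missing.
    if os.path.commonpath([root, full_path]) != root or not os.path.isfile(full_path):
        return b"HTTP/1.0 404 Not Found\r\nContent-Length: 0\r\n\r\n"
    with open(full_path, "rb") as f:  # load the file from disk...
        body = f.read()
    content_type = mimetypes.guess_type(full_path)[0] or "application/octet-stream"
    header = (
        "HTTP/1.0 200 OK\r\n"
        f"Content-Type: {content_type}\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    )
    return header.encode("ascii") + body  # ...and send it back with HTTP headers


if __name__ == "__main__":
    # Usage: serve one page from a throwaway document root.
    with tempfile.TemporaryDirectory() as site:
        with open(os.path.join(site, "index.html"), "w") as page:
            page.write("<h1>Hello</h1>")
        print(handle_request("GET / HTTP/1.0", site).decode())
```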

proxy server

A server that sits between a client application, such as a Web browser, and a real server. It intercepts all requests to the real server to see if it can fulfill the requests itself. If not, it forwards the request to the real server.

Proxy servers have two main purposes:

Improve Performance: Proxy servers can dramatically improve performance for groups of users, because the proxy saves (caches) the results of requests for a certain amount of time. Consider the case where both user X and user Y access the World Wide Web through a proxy server. First user X requests a certain Web page, which we'll call Page 1. Some time later, user Y requests the same page. Instead of forwarding the request to the Web server where Page 1 resides, which can be a time-consuming operation, the proxy server simply returns the copy of Page 1 that it already fetched for user X. Since the proxy server is often on the same network as the user, this is a much faster operation. Real proxy servers support hundreds or thousands of users. The major online services such as America Online, MSN and Yahoo, for example, employ an array of proxy servers.
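The Page 1 scenario can be modeled as a small cache keyed by URL. In the Python sketch below, `fetch` is a hypothetical stand-in for the trip to the real Web server, and the single fixed time-to-live (`ttl_seconds`) is an assumption; real caching proxies follow HTTP cache-control rules rather than one timeout.

```python
import time
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Toy caching proxy: repeated requests for a URL are answered from memory until they expire."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch          # hypothetical call to the real Web server
        self.ttl = ttl_seconds      # how long a saved copy stays valid
        self.clock = clock          # injectable so expiry can be tested
        self.cache: Dict[str, Tuple[float, str]] = {}
        self.origin_fetches = 0     # how many requests were forwarded

    def get(self, url: str) -> str:
        now = self.clock()
        entry = self.cache.get(url)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]         # cache hit: no trip to the real server
        # Cache miss or expired copy: forward the request and remember the result.
        self.origin_fetches += 1
        body = self.fetch(url)
        self.cache[url] = (now, body)
        return body


# Usage: user X and then user Y request the same page through the proxy.
proxy = CachingProxy(lambda url: "<html>contents of " + url + "</html>")
proxy.get("http://www.example.com/page1")  # user X: forwarded to the real server
proxy.get("http://www.example.com/page1")  # user Y: answered from the cache
print(proxy.origin_fetches)                # 1
```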

Filter Requests: Proxy servers can also be used to filter requests. For example, a company might use a proxy server to prevent its employees from accessing a specific set of Web sites.
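A request filter of this kind can be as simple as checking each requested host against a list. The sketch below assumes a hypothetical company policy stored as a set of domains, blocks each listed domain and its subdomains, and uses only the standard `urllib.parse.urlsplit`; commercial filtering proxies use much larger, categorized lists.

```python
from urllib.parse import urlsplit

# Hypothetical company policy: these domains (and their subdomains) are off limits.
BLOCKED_DOMAINS = {"games.example", "videos.example"}


def is_blocked(url: str, blocked_domains=BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").rstrip(".")  # hostname comes back lowercased
    return any(host == d or host.endswith("." + d) for d in blocked_domains)


def handle(url: str) -> str:
    # The proxy refuses blocked sites and would forward everything else.
    return "403 Forbidden" if is_blocked(url) else "forward to " + url


print(handle("http://www.games.example/play"))  # refused
print(handle("https://news.example/today"))     # forwarded
```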

spam filter

Spam is a term used to mean unsolicited bulk e-mail that clogs your inbox and is often annoying. A spam filter is a program that captures e-mails that look like spam before they reach your inbox.

A mail filter is a piece of software that takes an e-mail message as input. For its output, it might pass the message through unchanged for delivery to the user's mailbox, it might redirect the message for delivery elsewhere, or it might even throw the message away. Some mail filters are able to edit messages during processing.

A spam filter is a program that is used to detect unsolicited and unwanted email and prevent those messages from getting to a user's inbox. Like other types of filtering programs, a spam filter looks for certain criteria on which it bases judgments. For example, the simplest and earliest versions (such as the one available with Microsoft's Hotmail) can be set to watch for particular words in the subject line of messages and to exclude these from the user's inbox. This method is not especially effective: it too often blocks perfectly legitimate messages (these are called false positives) while letting actual spam through. More sophisticated programs, such as Bayesian filters or other heuristic filters, attempt to identify spam through suspicious word patterns or word frequency.
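The word-frequency approach can be illustrated with a tiny naive Bayes classifier, one common form of Bayesian filtering. The sketch below is a teaching example under simplifying assumptions (lowercase word tokens, add-one smoothing, every word treated independently, a handful of made-up training messages); production filters train on large mail collections and combine many more signals.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSpamFilter:
    def __init__(self) -> None:
        self.word_counts: Dict[str, Counter] = {"spam": Counter(), "ham": Counter()}
        self.message_counts = {"spam": 0, "ham": 0}

    def train(self, text: str, label: str) -> None:
        """Record one labeled message ('spam' or 'ham'); train on both labels before scoring."""
        self.word_counts[label].update(tokenize(text))
        self.message_counts[label] += 1

    def spam_probability(self, text: str) -> float:
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_messages = sum(self.message_counts.values())
        log_score = {}
        for label in ("spam", "ham"):
            # log P(label) + sum of log P(word | label), with add-one (Laplace) smoothing
            score = math.log(self.message_counts[label] / total_messages)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                score += math.log((self.word_counts[label][word] + 1) / (total_words + len(vocab)))
            log_score[label] = score
        # Convert the two log scores into P(spam | text), clamping to avoid overflow.
        diff = max(min(log_score["ham"] - log_score["spam"], 700.0), -700.0)
        return 1.0 / (1.0 + math.exp(diff))


spam_filter = NaiveBayesSpamFilter()
for text, label in [("win free money now", "spam"), ("free prize claim now", "spam"),
                    ("meeting agenda for monday", "ham"), ("lunch on monday with the team", "ham")]:
    spam_filter.train(text, label)
print(f"{spam_filter.spam_probability('claim your free money'):.2f}")  # 0.94
```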

Filter:

1) In computer programming, a filter is a program or section of code that is designed to examine each input or output request for certain qualifying criteria and then process or forward it accordingly. The term was used in Unix systems and is now used in other operating systems as well. A filter is "pass-through" code that takes input data, makes some specific decision about it and possibly transforms it, and passes it on to another program in a kind of pipeline. Usually, a filter does no input/output operation on its own. Filters are sometimes used to remove or insert headers or control characters in data.
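The pipeline idea can be sketched with Python generators: each function below is a filter that takes lines in, makes a decision or transformation, and passes lines on, roughly like chaining commands with `|` in a Unix shell. The function names and the "ERROR" example are illustrative assumptions. As in the description above, the filters themselves do no I/O; only `run` reads and writes.

```python
import sys
from typing import Iterable, Iterator


def matching(lines: Iterable[str], word: str) -> Iterator[str]:
    """Filter: pass through only the lines that contain `word` (like grep)."""
    for line in lines:
        if word in line:
            yield line


def drop_control_chars(lines: Iterable[str]) -> Iterator[str]:
    """Filter: remove control characters (code points below 32) except tab."""
    for line in lines:
        yield "".join(ch for ch in line if ch == "\t" or ord(ch) >= 32)


def add_header(lines: Iterable[str], header: str) -> Iterator[str]:
    """Filter: insert a header line in front of the data."""
    yield header
    yield from lines


def run(infile=sys.stdin, outfile=sys.stdout) -> None:
    """Chain the filters: read lines, pass them through each filter, write the result."""
    lines = (line.rstrip("\n") for line in infile)
    for line in add_header(drop_control_chars(matching(lines, "ERROR")), "# errors"):
        print(line, file=outfile)
```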

In Windows operating systems, using Microsoft's Internet Server Application Programming Interface (ISAPI), you can write a filter (in the form of a dynamic link library, or DLL, file) that the Web server (Microsoft IIS) passes control to each time there is a Hypertext Transfer Protocol (HTTP) request. Such a filter might log some or all requests, encrypt data, or take some other selective action.

2) In telecommunications, a filter is a device that selectively sorts signals and passes through a desired range of signals while suppressing the others. This kind of filter is used to suppress noise or to separate signals into bandwidth channels.
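Telecommunications filters are often analog circuits, but the same idea appears in digital signal processing. As a minimal sketch (an assumed digital example, not a description of any particular device), a moving average acts as a simple low-pass filter: it passes slowly changing signals and suppresses rapid fluctuations such as noise.

```python
from typing import List, Sequence


def moving_average(samples: Sequence[float], window: int) -> List[float]:
    """Low-pass filter: each output is the mean of the most recent `window` inputs."""
    if window < 1:
        raise ValueError("window must be at least 1")
    output: List[float] = []
    running_sum = 0.0
    for i, x in enumerate(samples):
        running_sum += x
        if i >= window:
            running_sum -= samples[i - window]          # drop the sample leaving the window
        output.append(running_sum / min(i + 1, window))  # shorter window at the start
    return output


print(moving_average([6, 4, 6, 4, 6, 4], 2))
```

For the alternating input above and a window of 2, the output settles at 5.0: the rapid up-and-down component is suppressed while the steady level passes through.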

3) In Photoshop and other graphics applications, a filter is a particular effect that can be applied to an image or part of an image. Filters can be fairly simple effects used to mimic traditional photographic filters (pieces of colored glass or gelatine placed over the lens to absorb specific wavelengths of light), or they can be complex programs used to create painterly effects.

Spam filter: Software that diverts incoming spam. Spam filters can be installed on the user's machine or on the mail server; in the latter case, the user never receives the spam in the first place. Spam filtering can be configured to trap messages based on a variety of criteria, including the sender's e-mail address, specific words in the subject or message body, or the type of attachment that accompanies the message. Various organizations, ISPs and individuals maintain address lists of habitual spammers (blacklists), as well as lists of acceptable addresses (whitelists) whose mail might otherwise be misconstrued as spam. Spam filters reject blacklisted messages and accept whitelisted ones. Sophisticated spam filters use AI techniques that look for key words and attempt to decipher their meaning in sentences, in order to analyze the content more effectively and avoid trashing a real message. Spam filters can also divert mail that comes to you addressed to "Undisclosed Recipients" instead of having your e-mail address spelled out in the "to" or "cc" field. See ad blocker, spam trap and Bayesian filtering.
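The blacklist, whitelist and "Undisclosed Recipients" checks can be sketched with the standard `email.utils` parsing helpers. The function names, the "accept"/"reject"/"check content" outcomes and the rule that the whitelist is consulted first are assumptions for illustration; list entries are expected in lowercase.

```python
from email.utils import getaddresses, parseaddr
from typing import Iterable, Set


def classify_sender(from_header: str, whitelist: Set[str], blacklist: Set[str]) -> str:
    """Whitelist wins, then blacklist; everything else goes on to content checks."""
    _, address = parseaddr(from_header)
    address = address.lower()
    domain = address.rpartition("@")[2]
    if address in whitelist or domain in whitelist:
        return "accept"
    if address in blacklist or domain in blacklist:
        return "reject"
    return "check content"


def addressed_to_me(recipient_headers: Iterable[str], my_address: str) -> bool:
    """False for mail (e.g. to "Undisclosed Recipients") that does not name you in To/Cc."""
    named = {addr.lower() for _, addr in getaddresses(list(recipient_headers)) if addr}
    return my_address.lower() in named


whitelist = {"boss@mycompany.example", "newsletter.example"}
blacklist = {"bulkmail.example"}
print(classify_sender("Boss <boss@MyCompany.example>", whitelist, blacklist))
print(classify_sender("deals@bulkmail.example", whitelist, blacklist))
```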

firewall

A system designed to prevent unauthorized access to or from a private network. Firewalls can be implemented in hardware, in software, or in a combination of both. Firewalls are frequently used to prevent unauthorized Internet users from accessing private networks connected to the Internet, especially intranets. All messages entering or leaving the intranet pass through the firewall, which examines each message and blocks those that do not meet the specified security criteria.

There are several types of firewall techniques:

Packet filter: Looks at each packet entering or leaving the network and accepts or rejects it based on user-defined rules. Packet filtering is fairly effective and transparent to users, but it is difficult to configure. In addition, it is susceptible to IP spoofing.
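A packet filter's user-defined rules are often evaluated top to bottom, with the first matching rule deciding the packet's fate and a default policy for anything unmatched. The Python sketch below models that idea with the standard `ipaddress` module; the `Rule` fields, the example rules and the default of rejecting unmatched packets are illustrative assumptions, and real packet filters also match destination addresses, interfaces, connection state and more. The decision trusts the packet's claimed source address, which is exactly why packet filtering is susceptible to IP spoofing.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    action: str            # "accept" or "reject"
    source: str            # source network in CIDR notation, e.g. "10.0.0.0/8"
    port: Optional[int]    # destination port; None matches any port
    protocol: str = "tcp"


def filter_packet(rules: List[Rule], src_ip: str, dst_port: int,
                  protocol: str = "tcp", default: str = "reject") -> str:
    """Return the action of the first rule matching the packet, else the default policy."""
    src = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (src in ipaddress.ip_network(rule.source)
                and rule.port in (None, dst_port)
                and rule.protocol == protocol):
            return rule.action
    return default


# Example policy: anyone may reach the Web server over HTTPS; SSH only from the internal network.
RULES = [
    Rule("accept", "0.0.0.0/0", 443),
    Rule("accept", "10.0.0.0/8", 22),
]
print(filter_packet(RULES, "203.0.113.7", 22))  # outside host trying SSH
```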

Application gateway: Applies security mechanisms to specific applications, such as FTP and Telnet servers. This is very effective, but can degrade performance.

Circuit-level gateway: Applies security mechanisms when a TCP or UDP connection is established. Once the connection has been made, packets can flow between the hosts without further checking.

Proxy server: Intercepts all messages entering and leaving the network. The proxy server effectively hides the true network addresses.

In practice, many firewalls use two or more of these techniques in concert.

A firewall is considered a first line of defense in protecting private information. For greater security, data can be encrypted.

In computer science, a firewall is a piece of hardware and/or software which functions in a networked environment to prevent some communications forbidden by the security policy, analogous to the function of firewalls in building construction. A firewall is also called a Border Protection Device (BPD), especially in NATO contexts, or packet filter in BSD contexts. A firewall has the basic task of controlling traffic between different zones of trust. Typical zones of trust include the Internet (a zone with no trust) and an internal network (a zone with high trust). The ultimate goal is to provide controlled connectivity between zones of differing trust levels through the enforcement of a security policy and connectivity model based on the least privilege principle.

Proper configuration of firewalls demands skill from the administrator. It requires considerable understanding of network protocols and of computer security. Small mistakes can render a firewall worthless as a security tool.

Digital signature

A digital signature (not to be confused with a digital certificate) is an electronic signature that can be used to authenticate the identity of the sender of a message or the signer of a document, and possibly to ensure that the original content of the message or document that has been sent is unchanged. Digital signatures are easily transportable, cannot feasibly be forged by anyone who lacks the signer's private key, and can be automatically time-stamped. The ability to ensure that the original signed message arrived means that the sender cannot easily repudiate it later.

A digital signature can be used with any kind of message, whether it is encrypted or not, simply so that the receiver can be sure of the sender's identity and that the message arrived intact. A digital certificate contains the digital signature of the certificate-issuing authority so that anyone can verify that the certificate is real.

How It Works: Assume you are going to send the draft of a contract to your lawyer in another town. You want to assure your lawyer that it is unchanged from what you sent and that it is really from you.

You copy-and-paste the contract (it's a short one!) into an e-mail note.

Using special software, you obtain a message hash (mathematical summary) of the contract.

You then use your private key (one half of a public-private key pair, usually certified through a certificate authority) to encrypt the hash.

The encrypted hash becomes your digital signature of the message. (Note that it will be different for each message you sign.) At the other end, your lawyer receives the message along with the signature.

To make sure it's intact and from you, your lawyer makes a hash of the received message.

Your lawyer then uses your public key to decrypt the signature, recovering the hash you computed.

If the two hashes match, the received message is valid: it has not been changed, and it was signed with your private key. http://en.wikipedia.org/wiki/Digital_signature
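The hash, encrypt-with-private-key and decrypt-with-public-key steps above can be traced with textbook RSA in Python. This is a toy for illustration only: the key is built from two publicly known Mersenne primes, the hash is simply reduced modulo the key size, and no padding scheme is used, so it offers no real security. Real systems use a vetted cryptography library with schemes such as RSA-PSS or ECDSA. `pow(E, -1, phi)` requires Python 3.8 or later.

```python
import hashlib

# Toy key pair from two well-known Mersenne primes (2**127 - 1 and 2**89 - 1).
# Public, small and unpadded: for illustration only, never for real signatures.
P = 2**127 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent


def message_hash(message: bytes) -> int:
    """SHA-256 digest as an integer, reduced mod N so it fits this toy key."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Sender: 'encrypt' the hash with the private key D."""
    return pow(message_hash(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Receiver: 'decrypt' with the public key E and compare with a fresh hash."""
    return pow(signature, E, N) == message_hash(message)


contract = b"Draft contract: the buyer pays 100 units on delivery."
signature = sign(contract)
print(verify(contract, signature))                 # True: unchanged and signed with the private key
print(verify(contract + b" (edited)", signature))  # False: the hashes no longer match
```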

Teleconferencing & videoconferencing

(1) To hold a conference via a telephone or network connection.

Computers have given new meaning to the term because they allow groups to do much more than just talk. Once a teleconference is established, the group can share applications and mark up a common whiteboard. There are many teleconferencing applications that work over private networks. One of the first to operate over the Internet was Microsoft's NetMeeting.

(2) To deliver live events via satellite to geographically dispersed downlink sites.

Teleconferencing is a telephone call between more than two participants.

The simplest form of teleconferencing is using three-way calling to set up your own teleconference between yourself and two other participants.

More advanced PBX equipment can set up conference calls between more than three participants. Most businesses choose to use a teleconferencing service instead of purchasing and maintaining their own teleconferencing systems.

A teleconference is a telephone or video meeting between participants in two or more locations. Teleconferences are similar to telephone calls, but they can expand discussion to more than two people. Using teleconferencing in a planning process, members of a group can all participate in a conference with agency staff people.

Teleconferencing uses communications network technology to connect participants’ voices. In many cases, speaker telephones are used for conference calls among the participants. A two-way radio system can also be used. In some remote areas, satellite enhancement of connections is desirable.

Video conferencing can transmit pictures as well as voices through video cameras and computer modems. Video conferencing technology is developing rapidly, capitalizing on the increasingly powerful capabilities of computers and telecommunications networks. Video conferencing centers and equipment are available for rent in many locations.

Why is it useful?

Teleconferencing reaches large or sparsely populated areas. It offers opportunities for people in outlying regions to participate. People participate either from home or from a local teleconferencing center. In Alaska, where winter weather and long distances between municipalities serve as roadblocks to public meetings, the State legislature has developed the Legislative Telecommunication Network (LTN). As an audio teleconference system, LTN can receive legislative testimony from residents or hold meetings with constituents during "electronic office hours." Although its main center is in the capitol building, it has 28 full-time conference centers and 26 voluntary conference centers in homes or offices of people who store and operate equipment for other local people. The system averages three teleconferences per day when the legislature is in session.

Teleconferencing provides broader access to public meetings, as well as widening the reach of public involvement. It gives additional opportunities for participants to relate to agency staff and to each other while discussing issues and concerns from physically separate locations. It enables people in many different locations to receive information first-hand and simultaneously. (See Public Meetings/Hearings.)

A wider group of participants means a broader range of ideas and points of view. Audio interaction makes dialogue more lively, personal, and interesting. Teleconferencing provides an immediate response to concerns or issues. It enables people with disabilities, parents with childcare conflicts, the elderly, and others to participate without having to travel. (See Americans with Disabilities.) In response to requests from residents in remote rural areas, the Oregon Department of Transportation (DOT) held two-way video teleconferences for its statewide Transportation Improvement Plan update. Two special meetings were broadcast by a private non-profit organization that operates ED-NET, a two-way teleconferencing system. ED-NET provided a teleconference among staff members in one of the DOT’s five regional offices and participants at central transmission facilities in a hospital and a community college in eastern Oregon.

Teleconferencing saves an agency time and travel costs. Without leaving their home office, staff members can have effective meetings that reach several people who might not otherwise be able to come together. Teleconferencing reduces the need for holding several meetings in different geographic areas, thereby decreasing public involvement costs, particularly staff time and travel. Teleconferencing often enables senior officials to interact with local residents when such an opportunity would not exist otherwise, due to distance and schedule concerns.

Teleconferencing saves people money. It saves travel time, transportation cost, babysitter fees, and lost work time. New York City’s Minerva Apartment Towers set up a closed-circuit teleconference transmission between two apartment buildings for residents to discuss issues within their site. Residents wishing to speak went to a room in their own building to make comments over a link-up between the apartment buildings.

Teleconferencing saves time by reaching more people with fewer meetings. A teleconference may reach more people in one session than in several sessions held in the field over several weeks. Usually, it is difficult to schedule more than two or three public meetings in the field within one week, due to staff commitments and other considerations. However, teleconference connections to several remote locations save several days or weeks of agency time and facilitate a fast-track schedule.

Does it have special uses?

Teleconferencing is useful when an issue is State- or regionwide. The World Bank uses moderated electronic conferences to identify best public involvement practices from front-line staff. The discussion focuses on fleshing out and sharing ideas so that practitioners in different locations can learn from the experiences of others around the world.

Teleconferencing helps increase the number of participants. People may be reluctant to travel to a meeting because of weather conditions, poor highway or transit access, neighborhood safety concerns, or other problems. Teleconferencing offers equal opportunity for people to participate, thus allowing more points of view to emerge, revealing areas of disagreement, and enabling people to exchange views and ask questions freely.

Teleconferencing is used for training. It opens up training hours and availability of courses for people unable to take specialized classes because of time constraints and travel costs. The National Transit Institute held a nationally broadcast session answering questions about requirements for Federal major investment studies (MIS). Over 1,700 people met at 89 teleconferencing sites to participate in the meeting. Feedback from participants was overwhelmingly in favor of the usefulness and practicality of the session.

Teleconferencing is used for networking among transportation professionals on public involvement and other topics. North Carolina State University sponsored a national teleconference on technologies for transportation describing applications of three- and four-dimensional computer graphics technologies. These technologies have been found helpful in facilitating public involvement and environmental analysis.

Who participates, and how?

Anyone can participate. Teleconferencing broadens participation with its wide geographical coverage. People living in remote areas can join in conversations. Participation becomes available even for the mobility-restricted, those without easy access to transportation, the disadvantaged, and the elderly. Poor or uneducated people, however, may be reluctant to participate for cultural reasons or because of lack of access. (See Americans with Disabilities; Ethnic, Minority, and Low-income Groups.)

Participants gather at two or more locations and communicate via phone or video. The event requires planning, so that participants are present at the appointed time at their divergent locations.

Participants should know what to expect during the session. A well-publicized agenda is required. It is helpful to brief participants so they understand the basic process and maximize the use of time for their participation. For example, basic concerns like speaking clearly or waiting to speak in turn are both elements of a successful teleconference-based meeting.

How do agencies use teleconferencing?

Teleconferencing elicits comments and opinions from the public. These comments and opinions become part of a record of public involvement. Agencies should plan to respond to comments and community input and to address specific concerns.

Teleconferencing offers immediate feedback from agency staff to the community. This feedback is a special benefit for participants in both time savings and satisfaction with agency actions. To assure immediacy, agencies must have staff available to respond to questions at the teleconference.

An agency can tailor its efforts to respond to a range of needs or circumstances, with broad input from diverse geographical and often underserved populations. The Montana DOT will use a teleconferencing network in the state as it updates its statewide plan.

Agencies use teleconferencing with individuals or with multiple groups. The range of participants varies from simple meetings between two or three people to meetings involving several people at many locations. Simple meetings can be somewhat informal, with participants free to discuss points and ask questions within a limited time.

Who leads a teleconference?

A trained facilitator, moderator, or group leader runs the meeting. A moderator needs to orchestrate the orderly flow of conversation by identifying the sequence of speakers. A staff person can be trained to open and lead the teleconference. (See Facilitation.)

Community people can lead the conversation. The moderator need not be an agency staff person. If the teleconference is taking place at the request of community people, it is appropriate that a community resident lead the session. Agency staff members should feel free to ask questions of community people to obtain a complete understanding of their point of view.

Each individual meeting site must have a person in charge to prevent the conversation from becoming chaotic. A teleconferencing facility coordinator can train agency staff or community people to lead the process. Appointment of an individual to guide conversation from a specific site should be informally carried out. Community groups may want to have a role in this appointment.

What are the costs?

Teleconferencing costs vary, depending on the application. The costs of installing a two-way telephone network are modest. For complex installations, including television, radio, or satellite connections, costs are significantly higher. Hiring outside help to coordinate equipment purchases or design an event adds to the expense.

For modest teleconferencing efforts, equipment and facilities are the principal costs. Higher costs are associated with higher performance levels of equipment, more transmission facilities, or more locations. Agencies may be able to rent a facility or set one up in-house. The San Diego Association of Governments is building its own central teleconferencing facility to provide increased opportunities for the agency to use this technique.

It is possible to share teleconferencing costs among organizations. Many States have teleconferencing capabilities in State colleges. States may have non-profit organizations with teleconferencing capabilities. Outside resources include cable television stations or donated use of private company facilities. Agency staff time devoted to the event may be a significant expense.

How is teleconferencing organized?

One person should be in charge of setting up a teleconference. That individual makes preparatory calls to each participant, establishes a specific time for the teleconference, and makes the calls to assemble the group. The same person should be in charge of setting an agenda based on issues brought up by individual participants.

Equipment for a telephone conference is minimal. Speaker phones allow several people to use one phone to listen to and speak with others, but they are not required. Individuals can be contacted on their extensions and participate fully in the conversations. While the basic equipment does not require an audiovisual specialist to operate, a technician may be required to set up equipment and establish telecommunications or satellite connections, particularly in more sophisticated applications.

Video conferencing needs are more complex. Basic equipment can involve:

personal computers;

a main computer control system;

one or more dedicated telephone lines or a satellite hook-up;

a television or computer monitor for each participant or group of participants; and

a video camera for each participant or group of participants.

More sophisticated facilities and equipment are required if a number of locations are interconnected.

In many cities, an individual or group can rent a private or public video conference room. Private companies often have in-house video conference rooms and systems. The Arizona DOT is considering establishing a mobile teleconferencing facility that can travel throughout the State. Many public facilities, particularly State institutions such as community colleges, have set up teleconference facilities.

Teleconferencing can kick off a project or planning effort and continue throughout the process. Teleconferences are targeted to a particular topic or address many areas, depending on the need for public input and participation.

Adequate preparation is critical to success and optimum effectiveness of a teleconference. The funding source for the teleconference must be identified and a moderator designated. The time and length of the teleconference must be established and an agenda prepared to organize the meeting’s content and times for speakers to present their views. Participants should be invited and attendance confirmed. This is a critical step, since there is little flexibility in canceling or postponing the event: there just are no second chances. Also, less than full participation means that important voices are not heard.

It is important to provide materials in advance. These include plans of alternatives, reports, evaluation matrices, cross-sections, or other visuals. (See Public Information Materials.) For video conferences, these materials may be on-screen but are usually difficult to read unless a participant has a printed document for reference. (See Video Techniques.) A moderator must be prepared to address all concerns covered by the written materials. Preparation smooths the way for all to participate in the teleconference. Without adequate preparation, teleconferences may need to be repeated, especially if questions are not all addressed thoroughly.

The technical set-up is crucial. Teleconferencing equipment and its several locations are key to the event’s success. Equipment must be chosen for maximum effect and efficiency in conducting a meeting between a central location and outlying stations.

Equipment must be distributed well. Because equipment is needed at each site, housing facilities for equipment must be identified. Seating needs to be arranged to maximize participation. A test-run of the equipment and the set-up for participants is important. The moderator may want to arrive early and practice using the equipment.

The moderator sets ground rules for orderly presentation of ideas. The moderator introduces participants in each location and reviews the objectives and time allotted for the meeting. Participants are urged to follow the moderator’s guidance for etiquette in speaking. They should follow basic rules: speak clearly, avoid jargon, and make no extraneous sounds, such as coughing, drumming fingers, or side conversations.

The meeting must follow the agenda. It is the moderator’s responsibility to keep the teleconference focused. In doing so, she or he must be organized, fair, objective, and open. The conference must be inclusive, providing an opportunity for all to register their views. The moderator must keep track of time to assure that the agenda is covered and time constraints are observed. It may be appropriate to have a staff person on hand to record action items, priorities, and the results of the teleconference.

How is it used with other techniques?

Teleconferencing is part of a comprehensive public involvement strategy. It can complement public information materials, smaller group meetings, open houses, and drop-in centers. (See Public Information Materials; Small Group Techniques; Open Forum Hearings/Open Houses; Drop-in Centers.) The Minneapolis–St. Paul Metropolitan Council initiated a partner ship with Twin City Computer Network to allow people to participate in electronic forums, obtain publications, reports, news, research findings, and local maps, and participate in surveys. (See Public Opinion Surveys.)

A teleconference can serve as a meeting of a community advisory committee or task force. It can cover simple items quickly, avoiding the need for a face-to-face meeting. For major issues, it is a way to prepare participants for an upcoming face-to-face discussion by outlining agendas, listing potential attendees, or describing preparatory work that is needed. (See Civic Advisory Committees; Collaborative Task Forces.)

Teleconferencing is a method for taking surveys of neighborhood organizations. It helps demonstrate the array of views within an organization and helps local organizations meet and determine positions prior to a survey of their views. (See Public Opinion Surveys.)

Teleconferencing is used in both planning and project development. It is useful during visioning processes, workshops, public information meetings, and roundtables. (See Visioning; Conferences, Workshops, and Retreats.)

What are the drawbacks?

Teleconferences are somewhat formal events that need prior planning for maximum usefulness. Although they require pre-planning and careful timing, teleconferences are conducted informally to encourage participation and the exchange of ideas.

A large number of people is difficult to manage in a single teleconference, with individuals attempting to interact and present their points of view. One-on-one dialogue with a few people is usually preferable. Widely divergent topics are also difficult to handle with a large number of people participating in a teleconference.

Costs can be high. Costs are incurred in equipment, varying sites for connections, transmission, and moderator training. Substantial agency staff time to coordinate and lead is likely.

Teleconferences take time to organize. Establishing technical links, identifying sites and constituencies, and coordinating meetings can be time-consuming. Materials need to be prepared and disseminated. However, teleconferencing saves time by being more efficient than in-person meetings, and the savings may offset staff efforts and other costs.

Staffing needs can be significant. Personnel such as technicians and agency staff to set up and coordinate meetings are required. Training to conduct a conference is necessary. However, staff time and resources may be significantly less than if personnel have to travel to several meetings at distant locations.

Community members may be alienated if a meeting is poorly implemented or if anticipated goals are not met. People need to be assured that the project and planning staff are mindful of their concerns. Technical and management difficulties, such as poor coordination between speakers or people being misunderstood or not heard, can result in bad feelings.

Teleconferencing reduces opportunities for face-to-face contact between participants and proponents of plans or projects. It cannot replace a desirable contact at individual meetings between stakeholders and agency staff in local sites. Effective public involvement includes meetings in the community to obtain a feel for the local population and issues. (See Public Meetings/Hearings; Non-traditional Meeting Places and Events.) A teleconference supplements rather than replaces direct contact with local residents and neighborhoods. Video conferencing, by contrast, enhances opportunities for face-to-face exchange.

The goals of a teleconference must be clear and manageable to avoid a potential perception of wasted time or frivolous expenditures.

Is teleconferencing flexible?

Teleconferencing lacks flexibility of location and timing. A teleconference among several people must have a well-established location, time, and schedule, publicized prior to the event. An agenda must be set well in advance of the meeting, with specific times set aside to cover all topics, so that people at different sites can follow the format of the meeting. The New York State DOT held a teleconference/public hearing for the draft State Transportation Plan. The well-defined agenda scheduled registration and a start time that coincided with a one-hour live telecast from the State capital, which included a roundtable discussion with the DOT Commissioner.

Videoconferencing can be flexible if it is a talk arranged between two locations. With few people, it may be as simple to arrange as a telephone call. With additional participants, it becomes less flexible.

Teleconferencing offers opportunities for participants who can’t travel to become involved. Enabling people to stay home or drive to a regional site offers flexibility in child care, transportation, and other factors that affect meeting attendance.

When is it used most effectively?

Teleconferencing is effective when participants have difficulty attending a meeting. This occurs when people are widely dispersed geographically and cannot readily meet with agency staff. Teleconferencing also serves people with disabilities, the elderly, and others who may have difficulties with mobility. (See Americans with Disabilities.)

Teleconferencing is effective when it focuses on specific action items that deserve comment. Teleconferences aid in prioritizing issues and discussing immediate action items. Detailed, wide-ranging discussions may be more properly handled with written materials and in-person interaction.

Teleconferencing helps give all participants an equal footing in planning and project development. Teleconferences overcome geographic dispersal and weather problems to aid contact with agency staff.

Multipoint videoconferencing allows three or more participants to sit in a virtual conference room and communicate as if they were sitting right next to each other. Until the mid-1990s, hardware costs made videoconferencing prohibitively expensive for most organizations, but that situation is changing rapidly. Many analysts believe that videoconferencing will be one of the fastest-growing segments of the computer industry in the latter half of the decade.

load and stress testing

1. Stress testing is subjecting a system to an unreasonable load while denying it the resources (e.g., RAM, disk, MIPS, interrupts) needed to process that load. The idea is to stress a system to the breaking point in order to find bugs that would make that break potentially harmful. The system is not expected to process the overload without adequate resources, but to behave (e.g., fail) in a decent manner (e.g., without corrupting or losing data). Bugs and failure modes discovered under stress testing may or may not be repaired, depending on the application, the failure mode, the consequences, etc. The load (incoming transaction stream) in stress testing is often deliberately distorted so as to force the system into resource depletion.

2. Load testing is subjecting a system to a (usually) statistically representative load. The two main reasons for using such loads are to support software reliability testing and performance testing. The term "load testing" by itself is too vague and imprecise to warrant use. For example, do you mean "representative load," "overload," "high load," etc.? In performance testing, load is varied from a minimum (zero) to the maximum level the system can sustain without running out of resources or having transactions suffer (application-specific) excessive delay.

3. A third use of the term is as a test whose objective is to determine the maximum sustainable load the system can handle. In this usage, "load testing" is merely testing at the highest transaction arrival rate in performance testing.

Unit Testing ...

The definitions of integration tests are after Leung and White.

**********

Unit. The smallest compilable component. A unit typically is the work of one programmer (at least in principle). As defined, it does not include any called sub-components (for procedural languages) or communicating components in general.

Unit Testing: in unit testing called components (or communicating components) are replaced with stubs, simulators, or trusted components. Calling components are replaced with drivers or trusted super-components. The unit is tested in isolation.

component: a unit is a component. The integration of one or more components is a component.

Note: The reason for "one or more" as opposed to "two or more" is to allow for components that call themselves recursively.

component testing: the same as unit testing except that all stubs and simulators are replaced with the real thing.

Two components (actually one or more) are said to be integrated when:

a. They have been compiled, linked, and loaded together.

b. They have successfully passed the integration tests at the interface between them.

Thus, components A and B are integrated to create a new, larger, component (A,B). Note that this does not conflict with the idea of incremental integration -- it just means that A is a big component and B, the component added, is a small one.

Integration testing: carrying out integration tests.

Integration tests (After Leung and White) for procedural languages. This is easily generalized for OO languages by using the equivalent constructs for message passing. In the following, the word "call" is to be understood in the most general sense of a data flow and is not restricted to just formal subroutine calls and returns -- for example, passage of data through global data structures and/or the use of pointers.

Let A and B be two components in which A calls B, and let:

Ta be the component-level tests of A.

Tb be the component-level tests of B.

Tab be the tests in A's suite that cause A to call B.

Tbsa be the tests in B's suite for which it is possible to sensitize A (the inputs are to A, not B).

Then Tbsa + Tab == the integration test suite (+ = union).

Note: Sensitize is a technical term. It means inputs that will cause a routine to go down a specified path. The inputs are to A. Not every input to A will cause A to traverse a path in which B is called. Tbsa is the set of tests which do cause A to follow a path in which B is called. The outcome of the test of B may or may not be affected.

There have been variations on these definitions, but the key point is that it is pretty darn formal and there's a goodly hunk of testing theory, especially as concerns integration testing, OO testing, and regression testing, based on them.
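The Leung and White construction can be made concrete with a small sketch. Everything in it is hypothetical: the components `a` and `b`, the test inputs, and the hand-derived `sensitizing_input` helper are illustrative assumptions, not part of the original definitions. A spy stands in for B so we can observe whether a given input to A actually drives A down a path that calls B; Tab keeps A's tests that do, and Tbsa keeps B's tests that can be re-expressed as inputs to A.

```python
from typing import Callable, Iterable, List, Optional, Set


def b(y: int) -> int:
    """Hypothetical callee component B."""
    return y + 1


def make_a(callee: Callable[[int], int]) -> Callable[[int], int]:
    """Hypothetical caller component A; only the x < 0 path calls B."""
    def a(x: int) -> int:
        if x < 0:
            return callee(-x)  # the A -> B call path
        return 2 * x           # a path that never reaches B
    return a


def b_calls_made(x: int) -> List[int]:
    """Run A on input x with a spy standing in for B; return the inputs B received."""
    seen: List[int] = []

    def spy(y: int) -> int:
        seen.append(y)
        return b(y)

    make_a(spy)(x)
    return seen


def t_ab(ta: Iterable[int]) -> Set[int]:
    """Tab: the tests in A's suite that cause A to call B."""
    return {x for x in ta if b_calls_made(x)}


def sensitizing_input(y: int) -> Optional[int]:
    """Hand-derived from A's path condition: the A input that delivers y to B, if any."""
    return -y if y > 0 else None


def t_bsa(tb: Iterable[int]) -> Set[int]:
    """Tbsa: B's tests re-expressed as inputs to A, kept only if A really reaches B with them."""
    result: Set[int] = set()
    for y in tb:
        x = sensitizing_input(y)
        if x is not None and b_calls_made(x) == [y]:
            result.add(x)
    return result


if __name__ == "__main__":
    ta = [-3, 0, 5, -1]  # hypothetical component-level tests of A
    tb = [0, 1, 2]       # hypothetical component-level tests of B
    print(sorted(t_bsa(tb) | t_ab(ta)))  # the integration suite: Tbsa + Tab
```

Here Tab is {-3, -1}, Tbsa is {-1, -2}, and their union {-3, -2, -1} is the integration suite. B's test with input 0 drops out because no input to A can drive that value across the interface. In real code the sensitizing inputs come from path analysis rather than a hand-written helper.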

As to the difference between integration testing and system testing: system testing specifically goes after behaviors and bugs that are properties of the entire system as distinct from properties attributable to components (unless, of course, the component in question is the entire system). Examples of system testing issues: resource loss bugs, throughput bugs, performance, security, recovery, transaction synchronization bugs (often misnamed "timing bugs").

*******

Black-box and white-box are test design methods. Black-box test design treats the system as a "black box", so it doesn't explicitly use knowledge of the internal structure. Black-box test design is usually described as focusing on testing functional requirements. Synonyms for black-box include: behavioral, functional, opaque-box, and closed-box. White-box test design allows one to peek inside the "box", and it focuses specifically on using internal knowledge of the software to guide the selection of test data. Synonyms for white-box include: structural, glass-box, and clear-box.

While black-box and white-box are terms that are still in popular use, many people prefer the terms "behavioral" and "structural". Behavioral test design is slightly different from black-box test design because the use of internal knowledge isn't strictly forbidden, but it's still discouraged. In practice, it hasn't proven useful to use a single test design method. One has to use a mixture of different methods so as not to be hindered by the limitations of any one of them. Some call this "gray-box" or "translucent-box" test design, but others wish we'd stop talking about boxes altogether.

It is important to understand that these methods are used during the test design phase, and their influence is hard to see in the tests once they're implemented. Note that any level of testing (unit testing, system testing, etc.) can use any test design method. Unit testing is usually associated with structural test design, but this is because testers usually don't have well-defined requirements at the unit level to validate.
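To illustrate the difference in how test data is chosen, here is a minimal sketch built around a hypothetical `discount` function. The black-box inputs come only from its stated spec (boundary values around the threshold); the white-box inputs come from reading the code so that every branch runs. CPython's `sys.settrace` is used as a crude line-coverage probe, which is an illustrative choice, not a recommended coverage tool.

```python
import sys
from typing import Callable, Iterable, Set


def discount(total_cents: int) -> int:
    """Hypothetical unit under test.

    Spec (all a black-box tester sees): orders of 10000 cents or more get 10% off.
    """
    if total_cents <= 0:       # internal guard the spec never mentions
        return 0
    if total_cents >= 10_000:
        return total_cents // 10
    return 0


def lines_executed(func: Callable[[int], int], inputs: Iterable[int]) -> Set[int]:
    """Crude line coverage via CPython's sys.settrace: which lines of func ran."""
    target = func.__code__
    seen: Set[int] = set()

    def tracer(frame, event, arg):
        if frame.f_code is not target:
            return None        # ignore every other function
        if event == "line":
            seen.add(frame.f_lineno)
        return tracer          # keep receiving 'line' events for this frame

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        for x in inputs:
            func(x)
    finally:
        sys.settrace(previous)
    return seen


# Black-box design: inputs and expected outputs taken from the spec alone
# (boundary values around the 10000-cent threshold).
BLACK_BOX = {9_999: 0, 10_000: 1_000, 25_000: 2_500}
# White-box design: inputs chosen by reading the code so every branch runs.
WHITE_BOX = {-5: 0, 500: 0, 10_000: 1_000}

if __name__ == "__main__":
    for suite in (BLACK_BOX, WHITE_BOX):
        assert all(discount(x) == want for x, want in suite.items())
    black = lines_executed(discount, BLACK_BOX)
    white = lines_executed(discount, WHITE_BOX)
    print("lines only the white-box tests reach:", sorted(white - black))
```

Both suites pass, but only the white-box suite exercises the `total_cents <= 0` guard, which is invisible from the spec. Combining the two sets of inputs is a small instance of the mixed approach the text recommends.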

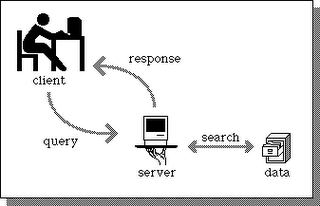

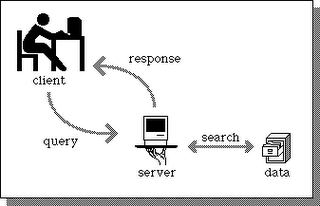

CLIENT/SERVER MODEL OF COMPUTING

The term client/server was first used in the 1980s in reference to personal computers (PCs) on a network. The actual client/server model started gaining acceptance in the late 1980s. The client/server software architecture is a versatile, message-based, and modular infrastructure that is intended to improve usability, flexibility, interoperability, and scalability as compared to centralized, mainframe, time-sharing computing.

The client's responsibility is usually to:

Handle the user interface.

Translate the user's request into the desired protocol.

Send the request to the server.

Wait for the server's response.

Translate the response into "human-readable" results.

Present the results to the user.

The server's functions include:

Listen for a client's query.

Process that query.

Return the results to the client.

A typical client/server interaction goes like this:

The user runs client software to create a query.

The client connects to the server.

The client sends the query to the server.

The server analyzes the query.

The server computes the results of the query.

The server sends the results to the client.

The client presents the results to the user.

Repeat as necessary.
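The interaction above can be sketched with Python's standard `socket` and `threading` modules. This is a minimal, single-request illustration under stated assumptions: the server and client run in one process on the loopback address, the "query" is a hypothetical list of integers, and the server's "analysis" is simply summing them. A real server would loop, handle many clients, and define a proper message format.

```python
import socket
import threading


def handle_query(query: str) -> str:
    """Hypothetical server-side processing: analyze the query and compute a result.
    Here the query is whitespace-separated integers and the result is their sum."""
    return str(sum(int(tok) for tok in query.split()))


def recv_all(conn: socket.socket) -> bytes:
    """Read until the peer closes its sending side."""
    chunks = []
    while True:
        data = conn.recv(4096)
        if not data:
            return b"".join(chunks)
        chunks.append(data)


def serve_one_client(listener: socket.socket) -> None:
    """Server: listen for a client's query, process it, return the result."""
    conn, _addr = listener.accept()
    with conn:
        query = recv_all(conn).decode()
        conn.sendall(handle_query(query).encode())


def send_query(host: str, port: int, query: str) -> str:
    """Client: connect, send the request, wait for the response, decode it."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall(query.encode())
        sock.shutdown(socket.SHUT_WR)  # tell the server the request is complete
        return recv_all(sock).decode()


def run_demo(query: str) -> str:
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        listener.listen()
        port = listener.getsockname()[1]
        server = threading.Thread(target=serve_one_client, args=(listener,))
        server.start()
        try:
            return send_query("127.0.0.1", port, query)
        finally:
            server.join()


if __name__ == "__main__":
    print(run_demo("1 2 3 4"))  # the client presents the server's result
```

The client half only formats the request and presents the reply, while all computation happens in `handle_query` on the server side, mirroring the division of responsibilities listed above.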

Flexible user interface development is the most obvious advantage of client/server computing. It is possible to create an interface that is independent of the server hosting the data. Therefore, the user interface of a client/server application can be written on a Macintosh and the server can be written on a mainframe. Clients could also be written for DOS- or UNIX-based computers. This allows information to be stored in a central server and disseminated to different types of remote computers. Since the user interface is the responsibility of the client, the server has more computing resources to spend on analyzing queries and disseminating information. This is another major advantage of client/server computing: it tends to use the strengths of divergent computing platforms to create more powerful applications. Although its computing and storage capabilities are dwarfed by those of the mainframe, there is no reason why a Macintosh could not be used as a server for less demanding applications.

In short, client/server computing provides a mechanism for disparate computers to cooperate on a single computing task.

Client/server describes the relationship between two computer programs in which one program, the client, makes a service request from another program, the server, which fulfills the request. Although the client/server idea can be used by programs within a single computer, it is a more important idea in a network. In a network, the client/server model provides a convenient way to interconnect programs that are distributed efficiently across different locations. Computer transactions using the client/server model are very common. For example, to check your bank account from your computer, a client program in your computer forwards your request to a server program at the bank. That program may in turn forward the request to its own client program, which sends a request to a database server at another bank computer to retrieve your account balance. The balance is returned to the bank data client, which in turn serves it back to the client in your personal computer, which displays the information for you.

The client/server model has become one of the central ideas of network computing. Most business applications being written today use the client/server model. So do most applications built on the Internet's core protocol suite, TCP/IP. In marketing, the term has been used to distinguish distributed computing by smaller dispersed computers from the "monolithic" centralized computing of mainframe computers. But this distinction has largely disappeared as mainframes and their applications have also turned to the client/server model and become part of network computing.

In the usual client/server model, one server, sometimes called a daemon, is activated and awaits client requests. Typically, multiple client programs share the services of a common server program. Both client programs and server programs are often part of a larger program or application. Relative to the Internet, your Web browser is a client program that requests services (the sending of Web pages or files) from a Web server (which technically is called a Hypertext Transfer Protocol or HTTP server) in another computer somewhere on the Internet. Similarly, your computer with TCP/IP installed allows you to make client requests for files from File Transfer Protocol (FTP) servers in other computers on the Internet.

Other program relationship models included master/slave, with one program being in charge of all other programs, and peer-to-peer, with either of two programs able to initiate a transaction.

Mean Time Between Failure (MTBF) - RAID

In 1987, Patterson, Gibson, and Katz at the University of California, Berkeley, published a paper entitled "A Case for Redundant Arrays of Inexpensive Disks (RAID)". This paper described various types of disk arrays, referred to by the acronym RAID. The basic idea of RAID was to combine multiple small, inexpensive disk drives into an array of disk drives which yields performance exceeding that of a Single Large Expensive Drive (SLED). Additionally, this array of drives appears to the computer as a single logical storage unit or drive.

The Mean Time Between Failure (MTBF) of the array will be equal to the MTBF of an individual drive, divided by the number of drives in the array. Because of this, the MTBF of an array of drives would be too low for many application requirements. However, disk arrays can be made fault-tolerant by redundantly storing information in various ways.
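As a worked example of the divide-by-N rule, the sketch below computes the array MTBF for a hypothetical 500,000-hour drive in a 10-drive non-redundant array and checks it with a Monte Carlo simulation. The rule holds under the assumption (stated in the code) that drive failures are independent and exponentially distributed; the drive rating and array size are illustrative, not taken from the text.

```python
import random


def array_mtbf(drive_mtbf_hours: float, n_drives: int) -> float:
    """MTBF of a non-redundant array: any single drive failure takes the array down."""
    return drive_mtbf_hours / n_drives


def simulated_array_mtbf(drive_mtbf_hours: float, n_drives: int,
                         trials: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo check, assuming independent, exponentially distributed drive lifetimes."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # The array fails at the moment its first drive fails.
        total += min(rng.expovariate(1.0 / drive_mtbf_hours) for _ in range(n_drives))
    return total / trials


if __name__ == "__main__":
    mtbf = array_mtbf(500_000, 10)       # hypothetical drive rating and array size
    print(mtbf, mtbf / (24 * 365))       # hours, then years
    print(simulated_array_mtbf(500_000, 10))
```

With these numbers the array MTBF is 50,000 hours, roughly 5.7 years, against about 57 years for a single drive, which is why the text turns to redundancy next.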

Five types of array architectures, RAID-1 through RAID-5, were defined by the Berkeley paper, each providing disk fault-tolerance and each offering different trade-offs in features and performance. In addition to these five redundant array architectures, it has become popular to refer to a non-redundant array of disk drives as a RAID-0 array.

Data Striping

Fundamental to RAID is "striping", a method of concatenating multiple drives into one logical storage unit. Striping involves partitioning each drive's storage space into stripes which may be as small as one sector (512 bytes) or as large as several megabytes. These stripes are then interleaved round-robin, so that the combined space is composed alternately of stripes from each drive. In effect, the storage space of the drives is shuffled like a deck of cards. The type of application environment, I/O or data intensive, determines whether large or small stripes should be used.
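The round-robin interleaving can be expressed as simple integer arithmetic. This sketch maps a logical byte address to a drive and an offset on that drive, assuming plain striping with no parity and equal stripe sizes on every drive; the 4-drive, 512-byte configuration is hypothetical.

```python
from typing import Tuple


def locate(logical_byte: int, stripe_size: int, n_drives: int) -> Tuple[int, int]:
    """Map a byte of the logical volume to (drive index, byte offset on that drive),
    assuming plain round-robin striping with no parity."""
    stripe_no, offset_in_stripe = divmod(logical_byte, stripe_size)
    drive = stripe_no % n_drives   # stripes are dealt to the drives like cards
    row = stripe_no // n_drives    # full rounds of the deck that came before this stripe
    return drive, row * stripe_size + offset_in_stripe


if __name__ == "__main__":
    # Hypothetical array: 4 drives, 512-byte (one-sector) stripes.
    for stripe in range(8):
        print(f"logical stripe {stripe} -> (drive, offset) {locate(stripe * 512, 512, 4)}")
```

Logical stripes 0 through 3 land at offset 0 on drives 0 through 3, and stripe 4 wraps back to drive 0 at offset 512, which is the "shuffled deck" layout described above.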

Most multi-user operating systems today, like NT, Unix, and NetWare, support overlapped disk I/O operations across multiple drives. However, in order to maximize throughput for the disk subsystem, the I/O load must be balanced across all the drives so that each drive can be kept busy as much as possible. In a multiple-drive system without striping, the disk I/O load is never perfectly balanced. Some drives will contain data files which are frequently accessed and some drives will only rarely be accessed. In I/O-intensive environments, performance is optimized by striping the drives in the array with stripes large enough so that each record potentially falls entirely within one stripe. This ensures that the data and I/O will be evenly distributed across the array, allowing each drive to work on a different I/O operation, and thus maximizing the number of simultaneous I/O operations which can be performed by the array.

In data-intensive environments and single-user systems which access large records, small stripes (typically one 512-byte sector in length) can be used so that each record spans all the drives in the array, with each drive storing part of the data from the record. This makes long record accesses faster, since the data transfer occurs in parallel on multiple drives. Unfortunately, small stripes rule out multiple overlapped I/O operations, since each I/O will typically involve all drives. However, operating systems like DOS, which do not allow overlapped disk I/O, will not be negatively impacted. Applications such as on-demand video/audio, medical imaging, and data acquisition, which use long record accesses, will achieve optimum performance with small-stripe arrays.
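The trade-off between large and small stripes comes down to how many drives a single request touches. This sketch, assuming plain round-robin striping and a hypothetical 4-drive array with 4096-byte records, counts the drives involved in one I/O: one-sector stripes spread a record over every drive (parallel transfer, no overlap), while 64 KiB stripes usually keep it on one drive (leaving the others free for other I/Os).

```python
from typing import Set


def drives_touched(start: int, length: int, stripe_size: int, n_drives: int) -> Set[int]:
    """Drives that a single I/O of `length` bytes starting at logical byte `start` must use,
    assuming plain round-robin striping (stripe k lives on drive k % n_drives)."""
    first = start // stripe_size
    last = (start + length - 1) // stripe_size
    # After n_drives consecutive stripes every drive is involved, so stop there.
    last = min(last, first + n_drives - 1)
    return {s % n_drives for s in range(first, last + 1)}


if __name__ == "__main__":
    record = 4096  # hypothetical record size in bytes
    print("small stripes:", drives_touched(0, record, 512, 4))
    print("large stripes:", drives_touched(0, record, 65_536, 4))
```

A record that happens to straddle a stripe boundary will touch two drives even with large stripes, which is why the text says each record "potentially" falls within one stripe.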

A potential drawback to using small stripes is that synchronized spindle drives are required in order to keep performance from being degraded when short records are accessed. Without synchronized spindles, each drive in the array will be at different random rotational positions. Since an I/O cannot be completed until every drive has accessed its part of the record, the drive which takes the longest will determine when the I/O completes. The more drives in the array, the more the average access time for the array approaches the worst case single-drive access time. Synchronized spindles assure that every drive in the array reaches its data at the same time. The access time of the array will thus be equal to the average access time of a single drive rather than approaching the worst case access time.
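The claim that the array's access time approaches the worst case can be quantified for rotational latency alone. Assuming each unsynchronized drive's angular position is independent and uniformly distributed, the expected wait for the slowest of n drives is n/(n+1) of a revolution, versus half a revolution with synchronized spindles. The sketch below computes both and checks the formula by simulation; the 7200 RPM figure is a hypothetical example, and seek time is deliberately ignored.

```python
import random


def expected_wait_unsynchronized(n_drives: int, rotation_ms: float) -> float:
    """Mean rotational wait for the slowest of n drives, assuming each drive's angular
    position is independent and uniform over one revolution: E[max] = n / (n + 1) rev."""
    return rotation_ms * n_drives / (n_drives + 1)


def expected_wait_synchronized(rotation_ms: float) -> float:
    """With synchronized spindles all drives wait the same: half a revolution on average."""
    return rotation_ms / 2


def simulate_unsynchronized(n_drives: int, rotation_ms: float,
                            trials: int = 20_000, seed: int = 7) -> float:
    """Monte Carlo estimate of the same quantity under the same assumptions."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += max(rng.uniform(0.0, rotation_ms) for _ in range(n_drives))
    return total / trials


if __name__ == "__main__":
    rotation = 60_000 / 7_200  # ms per revolution for a hypothetical 7200 RPM drive
    print("synchronized:", round(expected_wait_synchronized(rotation), 2))
    for n in (1, 2, 4, 8):
        print(n, round(expected_wait_unsynchronized(n, rotation), 2),
              round(simulate_unsynchronized(n, rotation), 2))
```

For this hypothetical drive the expected wait grows from about 4.2 ms with one drive to about 6.7 ms with four and 7.4 ms with eight, creeping toward the full 8.3 ms revolution.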

The different RAID levels

RAID-0

RAID Level 0 is not redundant, hence does not truly fit the "RAID" acronym. In level 0, data is split across drives, resulting in higher data throughput. Since no redundant information is stored, performance is very good, but the failure of any disk in the array results in data loss. This level is commonly referred to as striping.

RAID-1

RAID Level 1 provides redundancy by writing all data to two or more drives. The performance of a level 1 array tends to be faster on reads and slower on writes compared to a single drive, but if either drive fails, no data is lost. This is a good entry-level redundant system, since only two drives are required; however, since one drive is used to store a duplicate of the data, the cost per megabyte is high. This level is commonly referred to as mirroring.

RAID-2

RAID Level 2, which uses Hamming error correction codes, is intended for use with drives which do not have built-in error detection. All SCSI drives support built-in error detection, so this level is of little use when using SCSI drives.

RAID-3

RAID Level 3 stripes data at a byte level across several drives, with parity stored on one drive. It is otherwise similar to level 4. Byte-level striping requires hardware support for efficient use.

RAID-4

RAID Level 4 stripes data at a block level across several drives, with parity stored on one drive. The parity information allows recovery from the failure of any single drive. The performance of a level 4 array is very good for reads (the same as level 0). Writes, however, require that parity data be updated each time. This slows small random writes, in particular, though large writes or sequential writes are fairly fast. Because only one drive in the array stores redundant data, the cost per megabyte of a level 4 array can be fairly low.
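Why small writes are slow becomes clear from the parity arithmetic. With XOR parity, a write that changes a single data block can update parity without reading the whole stripe: new parity = old parity XOR old data XOR new data. That still costs two reads and two writes, and in RAID-4 both parity I/Os hit the same dedicated drive. The block contents below are hypothetical.

```python
def xor(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def small_write_parity(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """New parity for a write that changes one data block (read-modify-write).

    Costs two reads (old data, old parity) and two writes (new data, new parity),
    and in RAID-4 both parity I/Os land on the same dedicated drive.
    """
    return xor(old_parity, old_data, new_data)


if __name__ == "__main__":
    stripe = [b"\x01\x02", b"\x10\x20", b"\xaa\xbb"]  # hypothetical blocks on three data drives
    parity = xor(*stripe)
    new_block = b"\xff\x00"
    updated = small_write_parity(stripe[1], parity, new_block)
    stripe[1] = new_block
    # Same answer as recomputing parity from every data drive, without reading them all.
    print(updated == xor(*stripe))
```

Large sequential writes avoid this penalty because they overwrite whole stripes, so parity can be computed from the new data alone.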

RAID-5

RAID Level 5 is similar to level 4, but distributes parity among the drives. This can speed small writes in multiprocessing systems, since the parity disk does not become a bottleneck. Because parity data must be skipped on each drive during reads, however, the performance for reads tends to be considerably lower than a level 4 array. The cost per megabyte is the same as for level 4.
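The effect of distributing parity can be seen by counting where parity blocks live. This sketch compares RAID-4, where parity always sits on the last drive, with RAID-5 under an assumed simple rotation that starts on the last drive and moves one drive left per stripe row. Real RAID-5 layouts (left-symmetric, right-asymmetric, and others) differ in detail, so treat the rotation rule as illustrative only.

```python
from typing import List


def parity_drive(row: int, n_drives: int, raid_level: int = 5) -> int:
    """Drive holding the parity block for a given stripe row.

    RAID-4: always the last drive. RAID-5: an assumed simple rotation that starts on
    the last drive and moves one drive to the left per row (real layouts vary).
    """
    if raid_level == 4:
        return n_drives - 1
    return (n_drives - 1) - (row % n_drives)


def parity_load(rows: int, n_drives: int, raid_level: int) -> List[int]:
    """Parity blocks per drive over the first `rows` rows; every small write
    to a row also writes that row's parity block."""
    counts = [0] * n_drives
    for row in range(rows):
        counts[parity_drive(row, n_drives, raid_level)] += 1
    return counts


if __name__ == "__main__":
    print("RAID-4:", parity_load(8, 4, raid_level=4))
    print("RAID-5:", parity_load(8, 4, raid_level=5))
```

Over eight rows of a four-drive array, RAID-4 puts all eight parity blocks on one drive, while the rotation spreads them two per drive, so small writes to different rows no longer queue behind a single parity disk.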

Summary:

RAID-0 is the fastest and most efficient array type but offers no fault-tolerance.

RAID-1 is the array of choice for performance-critical, fault-tolerant environments. In addition, RAID-1 is the only choice for fault-tolerance if no more than two drives are desired.

RAID-2 is seldom used today since ECC is embedded in almost all modern disk drives.

RAID-3 can be used in data intensive or single-user environments which access long sequential records to speed up data transfer. However, RAID-3 does not allow multiple I/O operations to be overlapped and requires synchronized-spindle drives in order to avoid performance degradation with short records.

RAID-4 offers no advantages over RAID-5 and does not support multiple simultaneous write operations.

RAID-5 is the best choice in multi-user environments which are not write performance sensitive. However, at least three, and more typically five drives are required for RAID-5 arrays.
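The "cost per megabyte" remarks above follow from how much raw capacity each level leaves usable. The sketch below tabulates usable capacity for an array of identical drives under the layouts described in this section. RAID-2 is omitted because its ECC overhead depends on the code used, and the minimum drive counts other than RAID-5's three are assumed typical values, not taken from the text.

```python
from typing import Callable, Dict

# Drives' worth of usable space for n drives, per level.
USABLE_DRIVES: Dict[int, Callable[[int], int]] = {
    0: lambda n: n,       # striping only: all space holds data
    1: lambda n: 1,       # every drive holds a full copy of the same data
    3: lambda n: n - 1,   # one dedicated parity drive
    4: lambda n: n - 1,   # one dedicated parity drive
    5: lambda n: n - 1,   # parity rotates, but still totals one drive's worth
}
# Assumed minimum drive counts (RAID-5's three matches the text; the others are typical).
MIN_DRIVES: Dict[int, int] = {0: 2, 1: 2, 3: 3, 4: 3, 5: 3}


def usable_capacity(level: int, n_drives: int, drive_gb: float) -> float:
    """Usable capacity in GB of an array of identical drives."""
    if level not in USABLE_DRIVES:
        raise ValueError(f"RAID-{level} is not modeled in this sketch")
    if n_drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID-{level} needs at least {MIN_DRIVES[level]} drives")
    return USABLE_DRIVES[level](n_drives) * drive_gb


if __name__ == "__main__":
    n, size = 5, 1000.0  # hypothetical: five 1000 GB drives
    for level in sorted(USABLE_DRIVES):
        usable = usable_capacity(level, n, size)
        print(f"RAID-{level}: {usable:.0f} GB usable ({usable / (n * size):.0%} of raw)")
```

With five hypothetical 1000 GB drives, RAID-0 yields 5000 GB, RAID-1 yields 1000 GB, and RAID-3/4/5 yield 4000 GB, which is why mirroring has the highest cost per megabyte.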

Possible approaches to RAID

Hardware RAID

The hardware-based system manages the RAID subsystem independently from the host and presents to the host only a single disk per RAID array. This way the host doesn't have to be aware of the RAID subsystem(s).

The controller-based hardware solution

DPT's SCSI controllers are a good example of a controller-based RAID solution. The intelligent controller manages the RAID subsystem independently from the host. Its advantage over an external SCSI---SCSI RAID subsystem is that the controller can span the RAID subsystem over multiple SCSI channels and thereby remove the limiting factor of external RAID solutions: the transfer rate over the SCSI bus.

The external hardware solution (SCSI---SCSI RAID)

An external RAID box moves all RAID-handling "intelligence" into a controller that sits in the external disk subsystem. The whole subsystem is connected to the host via a normal SCSI controller and appears to the host as a single disk or multiple disks. This solution has drawbacks compared to the controller-based solution: the single SCSI channel used in this solution creates a bottleneck. Newer technologies like Fibre Channel can ease this problem, especially if they allow multiple channels to be trunked into a Storage Area Network. Four SCSI drives can already completely flood a parallel SCSI bus, since the average transfer size is around 4 KB and the command transfer overhead, which even in Ultra SCSI is still done asynchronously, takes most of the bus time.

Software RAID

The MD driver in the Linux kernel is an example of a RAID solution that is completely hardware-independent. The Linux MD driver currently supports RAID levels 0/1/4/5 plus linear mode.

Under Solaris, there are Solstice DiskSuite and Veritas Volume Manager, which offer RAID-0/1 and RAID-5.

Adaptec's AAA-RAID controllers are another example. They have no RAID functionality whatsoever on the controller and depend on external drivers to provide all of the RAID functionality. They are basically multiple single AHA2940 controllers integrated onto one card; Linux detects them as AHA2940 controllers and treats them accordingly. Every OS needs its own special driver for this type of RAID solution, which is error-prone and not very compatible.

Hardware vs. Software RAID

Just like any other application, software-based arrays occupy host system memory, consume CPU cycles, and are operating system dependent. By contending with other applications that are running concurrently for host CPU cycles and memory, software-based arrays degrade overall server performance. Also, unlike hardware-based arrays, the performance of a software-based array is directly dependent on server CPU performance and load.

Except for the array functionality, hardware-based RAID schemes have very little in common with software-based implementations. Since the host CPU can execute user applications while the array adapter's processor simultaneously executes the array functions, the result is true hardware multi-tasking. Hardware arrays also do not occupy any host system memory, nor are they operating system dependent.

Hardware arrays are also highly fault tolerant. Since the array logic is based in hardware, software is NOT required to boot. Some software arrays, however, will fail to boot if the boot drive in the array fails. For example, an array implemented in software can only be functional when the array software has been read from the disks and is memory-resident. What happens if the server can't load the array software because the disk that contains the fault tolerant software has failed? Software-based implementations commonly require a separate boot drive, which is NOT included in the array.

Dedicated Server

In the Web hosting business, a dedicated server refers to the rental and exclusive use of a computer that includes a Web server, related software, and connection to the Internet, housed in the Web hosting company's premises. A dedicated server is usually needed for a Web site (or set of related company sites) that may develop a considerable amount of traffic. The server can usually be configured and operated remotely from the client company.

A dedicated server is a single computer in a network reserved for serving the needs of the network. For example, some networks require that one computer be set aside to manage communications between all the other computers. A dedicated server could also be a computer that manages printer resources. Note, however, that not all servers are dedicated. In some networks, it is possible for a computer to act as a server and perform other functions as well.

A standby server is a second server that can be brought online if the primary production server fails. The standby server contains a copy of the databases on the primary server. A standby server can also be used when a primary server becomes unavailable due to scheduled maintenance. For example, if the primary server needs a hardware or software upgrade, the standby server can be used.

A standby SQL Server will have a copy of the main production database, which is kept as closely in sync with the original database as possible in terms of transactions.

Since SQL Server provides so many options for copying data between different databases and servers, novice DBAs often get confused about which option to use for maintaining a standby server. Some of those options include Data Transformation Services (DTS), BCP, replication, clustering, backup/restore, and log shipping.

Redundant Array of Independent (or Inexpensive) Disks

A category of disk drives that employ two or more drives in combination for fault tolerance and performance. RAID disk drives are used frequently on servers but aren't generally necessary for personal computers.

There are a number of different RAID levels:

1). Level 0 -- Striped Disk Array without Fault Tolerance: Provides data striping (spreading out blocks of each file across multiple disk drives) but no redundancy. This improves performance but does not deliver fault tolerance. If one drive fails then all data in the array is lost. RAID-0: This technique has striping but no redundancy of data. It offers the best performance but no fault-tolerance.

2). Level 1 -- Mirroring and Duplexing: Provides disk mirroring. Level 1 provides twice the read transaction rate of single disks and the same write transaction rate as single disks. There are at least nine types of RAID plus a non-redundant array (RAID-0). RAID-1: This type is also known as disk mirroring and consists of at least two drives that duplicate the storage of data. There is no striping. Read performance is improved since both disks can be read at the same time. Write performance is the same as for single-disk storage. RAID-1 provides the best performance and the best fault-tolerance in a multi-user system.

3). Level 2 -- Error-Correcting Coding: Not a typical implementation and rarely used, Level 2 stripes data at the bit level rather than the block level. RAID-2: This type uses striping across disks with some disks storing error checking and correcting (ECC) information. It has no advantage over RAID-3.

4) Level 3 -- Bit-Interleaved Parity: Provides byte-level striping with a dedicated parity disk. Level 3 cannot service multiple simultaneous requests and is also rarely used.

RAID-3: This type uses striping and dedicates one drive to storing parity information. The embedded error checking (ECC) information is used to detect errors. Data recovery is accomplished by calculating the exclusive OR (XOR) of the information recorded on the other drives. Since an I/O operation addresses all drives at the same time, RAID-3 cannot overlap I/O. For this reason, RAID-3 is best for single-user systems with long-record applications.
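
The XOR recovery step can be shown in a few lines of Python. This is a sketch assuming three equal-sized data chunks and one parity chunk; make_parity and rebuild are hypothetical names, and real controllers do this in hardware or firmware rather than in application code.

```python
from functools import reduce


def xor_bytes(*chunks: bytes) -> bytes:
    """Byte-wise XOR of equally sized chunks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def make_parity(data_chunks: list[bytes]) -> bytes:
    """Parity chunk stored on the dedicated parity drive."""
    return xor_bytes(*data_chunks)


def rebuild(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the single missing chunk: XOR the parity with every surviving chunk."""
    return xor_bytes(parity, *surviving)


if __name__ == "__main__":
    disks = [b"RAID", b"demo", b"xor!"]  # three data drives, 4 bytes each
    parity = make_parity(disks)
    lost = disks.pop(1)                  # simulate failure of data drive 1
    print(rebuild(disks, parity) == lost)
```

Because XOR is its own inverse, the parity chunk XORed with all surviving chunks cancels them out and leaves exactly the missing one.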

5) Level 4 -- Dedicated Parity Drive: A commonly used implementation of RAID, Level 4 provides block-level striping (like Level 0) with a parity disk. If a data disk fails, the parity data is used to rebuild its contents on a replacement disk. A disadvantage of Level 4 is that the parity disk can create write bottlenecks.

RAID-4: This type uses large stripes, which means you can read records from any single drive. This allows you to take advantage of overlapped I/O for read operations. Since all write operations have to update the parity drive, write I/O cannot be overlapped. RAID-4 offers no advantage over RAID-5.
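
Why the dedicated parity drive becomes a write bottleneck can be sketched in Python. The sketch assumes the common read-modify-write approach for small writes (new parity = old parity XOR old data XOR new data), so each write touches only its target data disk plus the parity disk; writes aimed at different data disks therefore all queue on the same parity disk. The names are hypothetical and blocks are modeled as small integers.

```python
from collections import Counter
from functools import reduce
from operator import xor


def updated_parity(old_parity: int, old_data: int, new_data: int) -> int:
    """Read-modify-write parity update for one changed block."""
    return old_parity ^ old_data ^ new_data


def simulate_writes(data: list[int], writes: list[tuple[int, int]]):
    """Apply (disk, new_value) writes; return data, parity and per-disk I/O counts."""
    data = list(data)
    parity = reduce(xor, data)
    io = Counter()
    for disk, value in writes:
        parity = updated_parity(parity, data[disk], value)
        data[disk] = value
        io[disk] += 1  # read old data, write new data on that data disk
        io["P"] += 1   # read old parity, write new parity on the one parity disk
    return data, parity, io


if __name__ == "__main__":
    data, parity, io = simulate_writes([10, 6, 15], [(0, 1), (1, 2), (2, 3)])
    print(parity == reduce(xor, data))  # parity is still consistent
    print(dict(io))                     # each data disk used once, parity disk three times
```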

6) Level 5 -- Block Interleaved Distributed Parity: Provides data striping at the block level and also stripes parity (error correction) information across all drives. This results in excellent performance and good fault tolerance. Level 5 is one of the most popular implementations of RAID.

RAID-5: This type includes a rotating parity array, thus addressing the write limitation in RAID-4. As a result, all read and write operations can be overlapped. RAID-5 stores parity information but not redundant data (although the parity information can be used to reconstruct data). RAID-5 requires at least three and usually five disks for the array. It is best for multi-user systems in which performance is not critical or which do few write operations.

7) Level 6 -- Independent Data Disks with Double Parity: Provides block-level striping with two sets of parity data distributed across all disks.

RAID-6: This type is similar to RAID-5 but includes a second parity scheme that is distributed across different drives and thus offers extremely high fault- and drive-failure tolerance.
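
The rotating parity that lets RAID-5 avoid RAID-4's single parity-disk bottleneck can be visualized with a short Python sketch. It assumes one simple rotation (parity moves one disk to the left on each stripe, with data filling the remaining disks in order); real controllers use several different rotation schemes, and RAID-6 would add a second parity block to each stripe. raid5_layout is a hypothetical name.

```python
def raid5_layout(num_disks: int, num_stripes: int) -> list[list[str]]:
    """Return a stripe-by-disk grid: 'P' marks parity, 'Dn' marks data block n.

    Assumed rotation: parity sits on the last disk for stripe 0 and moves one
    disk to the left on each following stripe.
    """
    if num_disks < 3:
        raise ValueError("RAID-5 needs at least three disks")
    grid, block = [], 0
    for stripe in range(num_stripes):
        parity_disk = (num_disks - 1 - stripe) % num_disks
        row = []
        for disk in range(num_disks):
            if disk == parity_disk:
                row.append("P")
            else:
                row.append(f"D{block}")
                block += 1
        grid.append(row)
    return grid


if __name__ == "__main__":
    for row in raid5_layout(4, 4):
        print(" ".join(f"{cell:>3}" for cell in row))
```

With four disks and four stripes, each disk holds parity for exactly one stripe, so parity updates are spread across all drives instead of piling up on one.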

8) Level 7: A trademark of Storage Computer Corporation that adds caching to Levels 3 or 4.

RAID-7: This type includes a real-time embedded operating system as a controller, caching via a high-speed bus, and other characteristics of a stand-alone computer. One vendor offers this system.

9) Level 0+1 -- A Mirror of Stripes: Not one of the original RAID levels. Two RAID 0 stripes are created, and a RAID 1 mirror is created over them. Used for both replicating and sharing data among disks.

Level 10 -- A Stripe of Mirrors: Not one of the original RAID levels. Multiple RAID 1 mirrors are created, and a RAID 0 stripe is created over them.

RAID-10: Combining RAID-0 and RAID-1 is often referred to as RAID-10, which offers higher performance than RAID-1 but at much higher cost. There are two subtypes: In RAID-0+1, data is organized as stripes across multiple disks, and then the striped disk sets are mirrored. In RAID-1+0, the data is mirrored and the mirrors are striped.
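
The practical difference between the two subtypes shows up when two disks fail. The Python sketch below enumerates every two-disk failure in a hypothetical four-disk array (disks 0-1 form one group, disks 2-3 the other), assuming, as is typical though not universal, that a RAID-0 stripe set with any failed member is treated as lost.

```python
from itertools import combinations

DISKS = range(4)
# Hypothetical 4-disk layout: disks 0 and 1 form one group, disks 2 and 3 the other.
GROUPS = [{0, 1}, {2, 3}]


def raid10_survives(failed: set[int]) -> bool:
    """RAID-1+0: each group is a mirror pair, and the pairs are striped.
    Data survives while every mirror pair still has a working disk."""
    return all(group - failed for group in GROUPS)


def raid01_survives(failed: set[int]) -> bool:
    """RAID-0+1: each group is a stripe set, and the two sets mirror each other.
    Data survives only while at least one stripe set is completely intact."""
    return any(not (group & failed) for group in GROUPS)


if __name__ == "__main__":
    pairs = [set(p) for p in combinations(DISKS, 2)]
    print("RAID-1+0 survives", sum(map(raid10_survives, pairs)), "of", len(pairs))
    print("RAID-0+1 survives", sum(map(raid01_survives, pairs)), "of", len(pairs))
```

Under that assumption, RAID-1+0 survives 4 of the 6 possible two-disk failures and RAID-0+1 only 2, because in RAID-0+1 one failure in each stripe set is enough to break both mirrors.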

10) RAID S: EMC Corporation's proprietary striped parity RAID system used in its Symmetrix storage systems.

RAID-S (also known as Parity RAID): This is an alternate, proprietary method for striped parity RAID from EMC Symmetrix that is no longer in use on current equipment. It appears to be similar to RAID-5, with some performance enhancements as well as the enhancements that come from having a high-speed disk cache on the disk array.

11) RAID-50 (or RAID-5+0): This type consists of a series of RAID-5 groups striped together in RAID-0 fashion to improve RAID-5 performance without reducing data protection.

12) RAID-53 (or RAID-5+3): This type uses striping (in RAID-0 style) for RAID-3's virtual disk blocks. This offers higher performance than RAID-3 but at much higher cost.

RAID is a way of storing the same data in different places (thus, redundantly) on multiple hard disks. By placing data on multiple disks, I/O (input/output) operations can overlap in a balanced way, improving performance. Because an array of many disks has a lower mean time between failures (MTBF) than a single disk, storing data redundantly is what keeps the array fault tolerant.
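
A quick calculation shows why more disks mean more frequent failures. Assuming independent disk failures at a constant rate (the usual exponential model), failure rates add, so the expected time until the first disk failure in an array of N identical disks is the single-disk MTBF divided by N. The 1,000,000-hour figure below is purely illustrative, and array_mtbf_hours is a hypothetical name.

```python
def array_mtbf_hours(disk_mtbf_hours: float, num_disks: int) -> float:
    """Expected hours until the FIRST disk failure among num_disks identical disks.

    Assumes independent, constant-rate (exponential) failures, so the array's
    failure rate is num_disks times a single disk's failure rate.
    """
    if num_disks < 1:
        raise ValueError("need at least one disk")
    return disk_mtbf_hours / num_disks


if __name__ == "__main__":
    for n in (1, 4, 10):
        print(n, "disks:", array_mtbf_hours(1_000_000, n), "hours")
```

Without redundancy (Level 0), that first failure loses data; with mirroring or parity, the array survives it and can be rebuilt.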

A RAID appears to the operating system to be a single logical hard disk. RAID employs the technique of disk striping, which involves partitioning each drive's storage space into units ranging from a sector (512 bytes) up to several megabytes. The stripes of all the disks are interleaved and addressed in order.

In a single-user system where large records, such as medical or other scientific images, are stored, the stripes are typically set up to be small (perhaps 512 bytes) so that a single record spans all disks and can be accessed quickly by reading all disks at the same time.

In a multi-user system, better performance requires establishing a stripe wide enough to hold the typical or maximum size record. This allows overlapped disk I/O across drives.
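
The effect of stripe size described in the last two paragraphs can be checked with a small Python sketch. It assumes round-robin striping over a hypothetical four-disk array; disks_touched is an illustrative name. A small stripe unit spreads one record over every disk, while a wide unit keeps a typical record on one disk, leaving the other disks free to serve other users' requests.

```python
def disks_touched(offset: int, size: int, stripe_unit: int, num_disks: int) -> set[int]:
    """Disks that hold at least one byte of a record, for round-robin striping.

    offset and size are in bytes; stripe_unit is the number of bytes written
    to one disk before moving on to the next.
    """
    if size < 1 or stripe_unit < 1 or num_disks < 1:
        raise ValueError("size, stripe_unit and num_disks must be positive")
    first_unit = offset // stripe_unit
    last_unit = (offset + size - 1) // stripe_unit
    if last_unit - first_unit + 1 >= num_disks:
        return set(range(num_disks))  # the record wraps across every disk
    return {unit % num_disks for unit in range(first_unit, last_unit + 1)}


if __name__ == "__main__":
    record = 2048  # a 2 KB record at offset 0
    print(disks_touched(0, record, 512, 4))    # small units: spread over all disks
    print(disks_touched(0, record, 65536, 4))  # wide units: stays on one disk
```

Even with wide stripe units, a record that straddles a unit boundary touches two disks (for example, disks_touched(65000, 2048, 65536, 4) gives {0, 1}), which is why the stripe should be wide enough for the typical or maximum record size.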